Plantinghealth

Overview

- Founded Date: October 27, 1963
- Sectors: Agribusiness
- Posted Jobs: 0
- Viewed: 29

Company Description

If There’s Intelligent Life Out There

Optimizing LLMs to be proficient at specific tests backfires on Meta, Stability.


Hugging Face has released its second LLM leaderboard to rank the best language models it has tested. The new leaderboard aims to be a more challenging, uniform standard for testing open large language model (LLM) performance across a variety of tasks. Alibaba’s Qwen models appear dominant in the leaderboard’s inaugural rankings, taking three spots in the top 10.

Pumped to announce the brand new open LLM leaderboard. We burned 300 H100 to re-run new evaluations like MMLU-pro for all major open LLMs! Some learning: - Qwen 72B is the king and Chinese open models are dominating overall - Previous evaluations have become too easy for recent … June 26, 2024

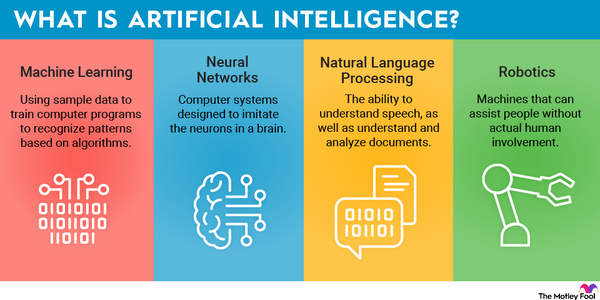

Hugging Face’s second leaderboard tests language models across four tasks: knowledge testing, reasoning on extremely long contexts, complex math abilities, and instruction following. Six benchmarks are used to test these qualities, with tests including solving 1,000-word murder mysteries, explaining PhD-level questions in layman’s terms, and most daunting of all: high-school math equations. A full breakdown of the benchmarks used can be found on Hugging Face’s blog.

The frontrunner of the new leaderboard is Qwen, Alibaba’s LLM, which takes 1st, 3rd, and 10th place with its handful of variants. Also showing up are Llama3-70B, Meta’s LLM, and a handful of smaller open-source projects that managed to outperform the pack. Notably absent is any sign of ChatGPT; Hugging Face’s leaderboard does not test closed-source models, to ensure reproducibility of results.

Tests to qualify on the leaderboard are run exclusively on Hugging Face’s own computers, which, according to CEO Clem Delangue’s Twitter, are powered by 300 Nvidia H100 GPUs. Because of Hugging Face’s open-source and collaborative nature, anyone is free to submit new models for testing and admission on the leaderboard, with a new voting system prioritizing popular new entries for testing. The leaderboard can be filtered to show only a highlighted array of significant models to avoid a confusing glut of small LLMs.

As a pillar of the LLM space, Hugging Face has become a trusted source for LLM learning and community collaboration. After its first leaderboard was released in 2023 as a means to compare and reproduce testing results from several established LLMs, the board quickly took off in popularity. Getting high ranks on the board became the goal of many developers, small and large, and as models have become generally stronger, ‘smarter,’ and optimized for the specific tests of the first leaderboard, its results have become less and less meaningful, hence the creation of a second variant.

Some LLMs, including newer variants of Meta’s Llama, severely underperformed in the new leaderboard compared to their high marks in the first. This came from a trend of over-training LLMs only on the first leaderboard’s benchmarks, leading to regression in real-world performance. This regression, driven by hyperspecific and self-referential data, follows a trend of AI performance growing worse over time, proving once again, as Google’s AI answers have shown, that LLM performance is only as good as its training data and that true artificial “intelligence” is still many, many years away.


Dallin Grimm is a contributing writer for Tom’s Hardware. He has been building and breaking computers since 2017, serving as the resident youngster at Tom’s. From APUs to RGB, Dallin has a handle on all the latest tech news.


bit_user

LLM performance is only as good as its training data and that true artificial “intelligence” is still many, many years away.

First, this statement discounts the role of network architecture.

The definition of “intelligence” cannot be whether something processes information exactly like humans do, or else the search for extraterrestrial intelligence would be entirely futile. If there’s intelligent life out there, it probably doesn’t think quite like we do. Machines that act and behave intelligently also needn’t necessarily do so, either.


jp7189

I don’t love the click-bait China vs. the world title. The fact is Qwen is open source, open weights and can be run anywhere. It can be (and already has been) fine-tuned to add/remove bias. I applaud Hugging Face’s work to create standardized tests for LLMs, and for putting the focus on open source, open weights first.


jp7189

bit_user said:

First, this statement discounts the role of network architecture.

Second, intelligence isn’t a binary thing – it’s more like a spectrum. There are various classes of cognitive tasks and capabilities you might be familiar with, if you study child development or animal intelligence.

The definition of “intelligence” cannot be whether something processes information exactly like humans do, or else the search for extraterrestrial intelligence would be entirely futile. If there’s intelligent life out there, it probably doesn’t think quite like we do. Machines that act and behave intelligently also needn’t necessarily do so, either.

We’re creating tools to help humans, therefore I would argue LLMs are more useful if we grade them by human intelligence standards.


Tom’s Hardware is part of Future US Inc, an international media group and leading digital publisher.
